Long story short: They aren’t.

The experiment

Here’s what I did:

- I signed up for a service selling ChatGPT prompts and selected five SEO ones

- I created simple versions of these prompts (27 words on average, vs. 227 for the premium prompts)

- I entered these prompts into ChatGPT

- I took the outputs and ran a blind poll, asking the Ahrefs marketing team to pick which output was better

A total of 15 people took the poll.
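If you want to run a similar blind poll yourself, here's a minimal Python sketch of the idea: shuffle the two outputs behind neutral labels, collect votes, then map the votes back to their source. The function names and the placeholder votes are my own assumptions for illustration. They are not the tooling or the results from this poll.

```python
import random
from collections import Counter


def blind_pair(simple_output, premium_output, rng):
    """Hide which prompt produced which output behind the labels A and B."""
    items = [("simple", simple_output), ("premium", premium_output)]
    rng.shuffle(items)  # random order so voters can't infer the source
    labels = ["A", "B"]
    shown = {label: text for label, (_, text) in zip(labels, items)}
    key = {label: source for label, (source, _) in zip(labels, items)}
    return shown, key


def tally(votes, key):
    """Translate each A/B vote back to its source and count the totals."""
    return Counter(key[vote] for vote in votes)


rng = random.Random(0)  # fixed seed only so the sketch is repeatable
shown, key = blind_pair(
    "output from the simple prompt",
    "output from the premium prompt",
    rng,
)

# Placeholder votes for illustration only; NOT the results of this poll.
votes = ["A", "B", "A"]
print(tally(votes, key))
```

Voters only ever see `shown`. The `key` stays hidden until the votes are in, which is what keeps the poll blind.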

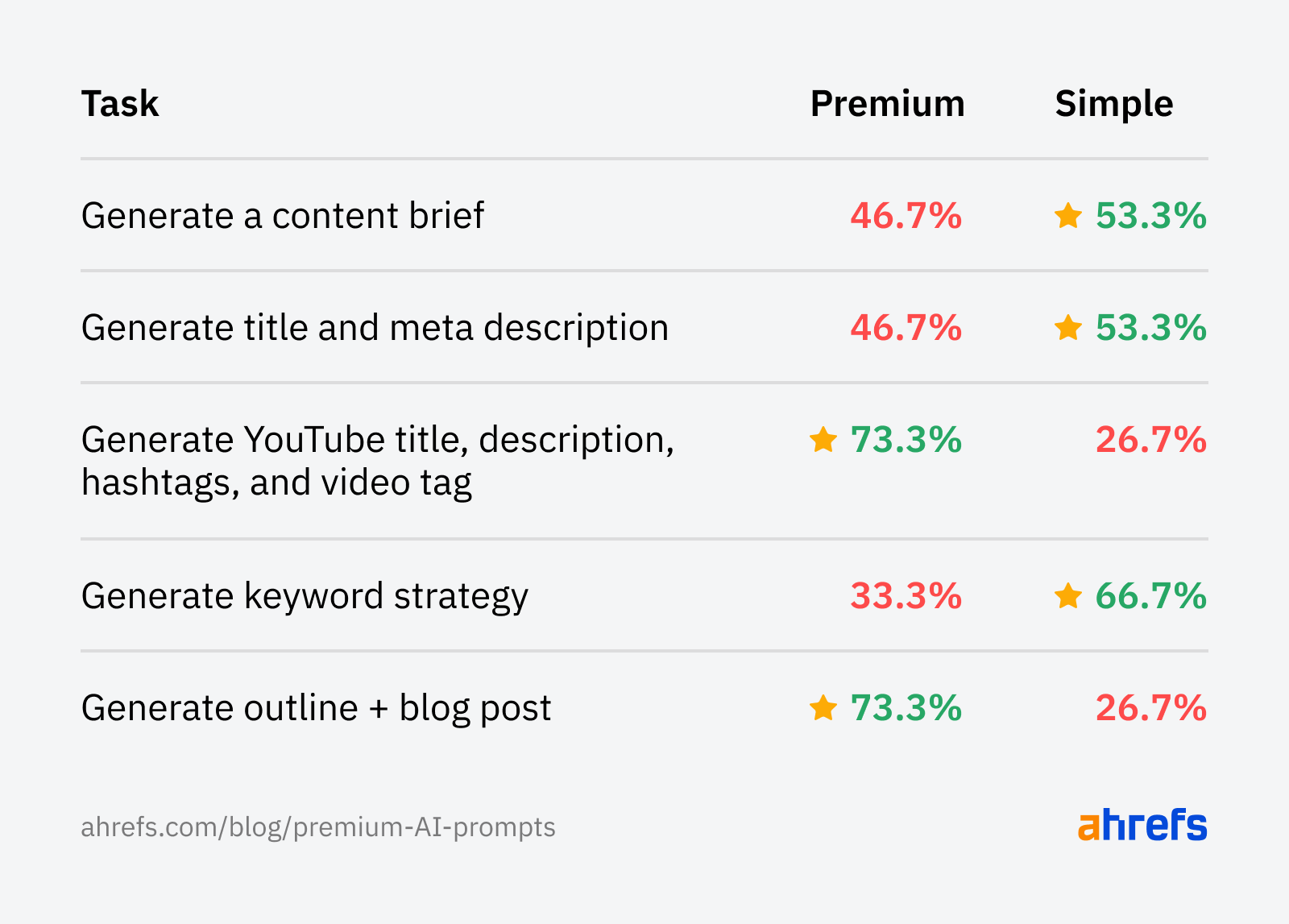

Here are the results:

The premium prompts convincingly “beat” the simple prompts in only two of the five tasks.

Considering that you’ll need to cough up money (in some cases, a lot) for these pre-engineered prompts, I’m skeptical they’re worth it at all. I don’t think you’re missing out if you don’t pay.

The anatomy of a “premium” prompt

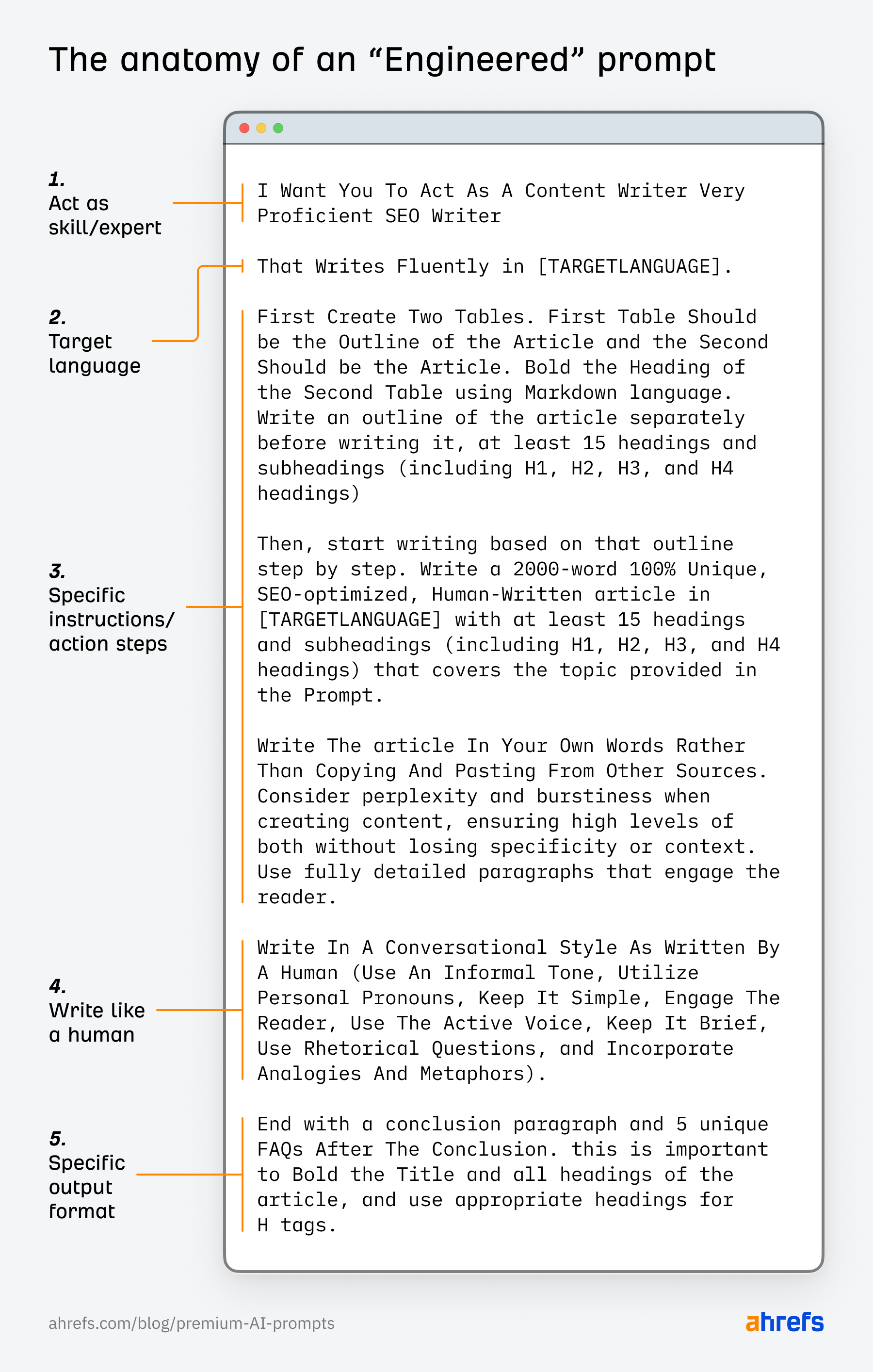

Pretty much all of the “engineered” prompts we tested had a similar structure.

I tried to break down what I saw. Here are the patterns:

- Role — Asks ChatGPT to act as a professional or expert with specific skill sets.

- Target language — Asks ChatGPT to respond in a particular language.

- Ultra-specific instructions — Asks ChatGPT to do XYZ action in extreme detail.

- Emphasis on human-like writing — Asks ChatGPT to write in a specific tone or style.

- Specific output — Asks ChatGPT to structure its response in a specific format, such as markdown, tables, code boxes, etc.
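To make that structure concrete, here's a rough Python sketch that assembles a prompt from the five patterns above and compares its word count with a simple one-line prompt. Everything in it (the function name, the example task, the role, the instructions) is a hypothetical I made up for illustration. It is not one of the paid prompts I tested.

```python
def build_premium_style_prompt(task, role, language, instructions, tone, output_format):
    """Assemble a prompt that follows the five patterns listed above."""
    parts = [
        f"Act as {role}.",                       # Role
        f"Respond in {language}.",               # Target language
        f"Task: {task}",
        "Follow these instructions exactly:",    # Ultra-specific instructions
        *[f"- {step}" for step in instructions],
        f"Write in a {tone} tone so the text reads as if a person wrote it.",  # Human-like writing
        f"Format the response as {output_format}.",  # Specific output
    ]
    return "\n".join(parts)


# Hypothetical example task, not taken from the experiment.
simple_prompt = "Write a meta description for a blog post about link building."

premium_style_prompt = build_premium_style_prompt(
    task=simple_prompt,
    role="an experienced SEO copywriter",
    language="English",
    instructions=[
        "Keep the description under 160 characters.",
        "Mention the main keyword once, near the start.",
        "End with a clear reason to click.",
    ],
    tone="friendly, conversational",
    output_format="a markdown table with one row per variation",
)

for name, prompt in [("simple", simple_prompt), ("premium-style", premium_style_prompt)]:
    print(f"{name}: {len(prompt.split())} words")
```

The point of the sketch is only that the "engineered" version is the simple prompt plus a fixed wrapper. You can add or drop any of those wrapper lines yourself and see whether the output changes.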

The takeaway

This experiment is by no means academic or scientific. It’s just a fun, informal test to see if “engineered” prompts could perform better than a simple command, at least in the eyes of human judges.

Based on the results, though, I’m not convinced that “premium” prompts really do that much for you. It’s probably better to start off simple and then refine accordingly.

So, don’t waste your money. Use it to upgrade to ChatGPT Plus instead.

What do you think? Let me know on X.